Introducing Neutree: An Enterprise-Grade Private Model-as-a-Service Platform

When Running Models Is Easy, Turning Them into Services Is Not.

Over the past year, running LLMs has become easier than ever. With the right framework and enough GPUs, most teams can get a model to produce tokens in their own environment.

Yet many teams share a similar feeling after “getting it to work”:

- The model runs, but it doesn’t quite behave like a “service”. A service implies more than producing tokens. It implies availability, scalability, observability, and orchestration.

- GPU capacity often ends up fragmented into isolated islands, making it hard to pool heterogeneous accelerators and schedule workloads consistently.

- And without a real operational layer—users, API keys, RBAC, and usage accounting—The model may run, but sustaining it across teams and use cases becomes increasingly hard.

At that point, it becomes clear: the challenge is no longer about running models. It is about operating them.

In response, teams often look for answers in the tooling itself.

They compare frameworks, adjust configurations, and try alternative deployment setups.

These choices matter, but they don’t address the core issue. The problem is the absence of a control and operations layer around the models.

Introducing Neutree

To address this gap, we built Neutree.

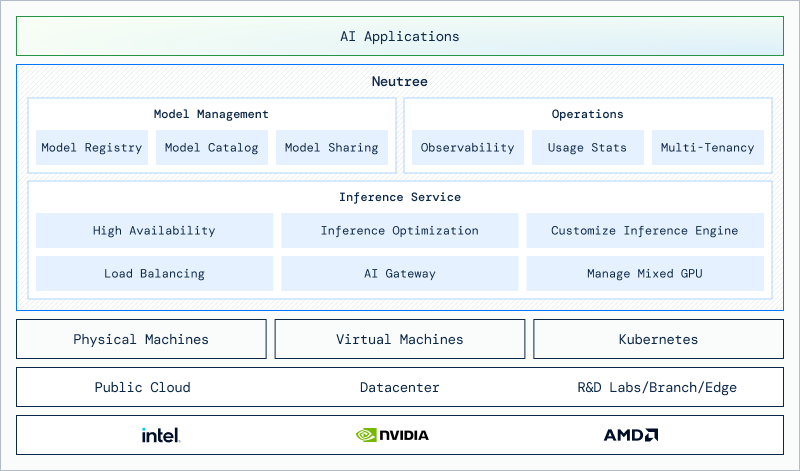

Neutree is a Model-as-a-Service platform designed for private environments.

It introduces a management layer for model inference, covering resource pooling, workload scheduling, and operational primitives such as user management, API access control, and usage statistics.

Rather than focusing on individual model deployments, Neutree treats models as managed services that can be shared, governed, and operated consistently across heterogeneous hardware.

Why Private Matters

The idea of running critical infrastructure in private environments is not new.

Public cloud platforms are widely adopted and well understood. At the same time, private infrastructure continues to exist—and evolve—for workloads that require tighter control, predictable costs, or specific compliance and data constraints.

AI services are following a similar path. Public AI services are the default choice today for their accessibility and ease of integration.

But as AI systems become more deeply embedded into products and operations, we expect private AI services to become increasingly common.

What Neutree Focuses On

End-to-End Private Deployment

Neutree is designed to support end-to-end deployments within private environments.

It pools accelerator resources into a unified AI cluster, regardless of whether they originate from physical machines, virtual machines, or Kubernetes-based setups. This allows existing infrastructure to be managed consistently, while maximizing flexibility and utilization of valuable compute resources.

Neutree also provides a private model registry to manage model weights. By keeping both compute resources and model artifacts within the same system, model deployment and operation remain fully self-contained.

Production-Ready Inference Services

Neutree does not replace existing inference engines. Instead, it integrates with widely used engines such as vLLM and llama.cpp, and provides the production capabilities required to operate them reliably as services.

Neutree’s inference services support multi-replica high availability and horizontal scaling. Scheduling and load-balancing decisions are made with awareness of runtime characteristics, including KV-cache aware routing.

Observability is built into the system through metrics, logs, and health checks, making inference services easier to operate and reason about under real workloads.

Operational Controls by Design

Neutree provides the operational controls required when models are shared across users and teams.

It supports multi-tenancy and fine-grained role-based access control (RBAC), allowing access to models and resources to be managed explicitly. Usage statistics are collected at the platform level, making resource consumption visible and auditable over time.

Open Source and Origins

Neutree is developed as an open-source project.

The AI ecosystem today covers many layers: models, inference engines, and application-level frameworks.

What remains relatively underexplored is a general-purpose platform layer for managing model inference as a shared, operational system.

Neutree is positioned at this layer. It is designed to be usable out of the box for common deployment scenarios, while remaining flexible enough to support customization and extension when requirements differ. An open-source foundation makes this balance possible, allowing teams to adapt the platform rather than work around it.

The project is open to contributions and is expected to evolve through practical usage and feedback from the community.

Neutree is built and maintained by Arcfra. As an infrastructure-focused company, Arcfra brings experience from building and operating IaaS and platform-level systems. This background informs how Neutree is developed, with attention to long-term maintainability, clear system boundaries, and enterprise deployment requirements.

Arcfra also ensures the continued development of Neutree and provides commercial version and support services for organizations that require them.

Getting Started

The documentation provides a practical starting point for exploring Neutree.

The Getting Started guide outlines the basic setup and concepts, with more detailed topics available as you go further.